How I Taught AI to Make Memes 🤖

On meme generation with Claude 3.5 Sonnet before end-to-end image generation was a thing

Disclaimer: All memes used in this post are automatically generated.

I’ve tried generating memes for specific queries with LLMs several times over the past few years. In 2023, you could already do it fairly successfully, but there were two problems:

The models were text-only, generating captions for existing images and videos.

The memes were not funny.

The first turning point was Claude 3.5 Sonnet, especially for languages other than English. It finally started to generate some genuinely funny memes, surprisingly solving the second problem. However, some infrastructure was still necessary: a collection of annotated templates and code to process them.

The second turning point is happening right now, with GPT-4o image generation and Gemini 2.0 Flash native image generation. The whole process is now end-to-end: models generate both the image and its captions, solving the first problem too. Of course, these new systems are far from perfect (or even good), but they can imitate some templates and place coherent captions.

In that sense, this post is somewhat obsolete: it describes the system I used to generate memes before end-to-end solutions arrived. I originally built it for an AI meme generation competition. The task was:

Develop a system that implements a publicly accessible API method that accepts a JSON request and returns a JSON response. The request will contain a textual prompt from a user, and the response should include a link to a JPG image or MP4 video. The media element should not contain watermarks or other identifying marks. The method should respond within 15 seconds.
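The hard requirement here is the 15-second deadline. A minimal sketch of how a handler might enforce it, assuming hypothetical field names (`prompt` in the request, `url` in the response; the task only says "a textual prompt" and "a link"), a hypothetical `generate` coroutine that returns the media URL, and a hypothetical pre-rendered fallback meme:

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable

# Hypothetical field names: the task only says that the request carries a
# textual prompt and the response carries a link to a JPG or MP4 file.
@dataclass
class MemeRequest:
    prompt: str


@dataclass
class MemeResponse:
    url: str


TIME_LIMIT_S = 15.0
SAFETY_MARGIN_S = 1.0  # headroom for network and serialization overhead

# Hypothetical generator signature: prompt in, URL of the rendered meme out.
MemeGenerator = Callable[[str], Awaitable[str]]


async def handle(
    request: MemeRequest,
    generate: MemeGenerator,
    fallback_url: str,
    timeout: float = TIME_LIMIT_S - SAFETY_MARGIN_S,
) -> MemeResponse:
    """Run the generator under a deadline; on timeout, return a fallback meme."""
    try:
        url = await asyncio.wait_for(generate(request.prompt), timeout=timeout)
    except asyncio.TimeoutError:
        # wait_for has already cancelled the slow generation at this point.
        url = fallback_url
    return MemeResponse(url=url)
```

Returning a canned meme on timeout is just one design choice; an error response would also satisfy the letter of the task, but probably not the users.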

Solution TL;DR

Memegen for image templates

Video templates with a single caption format

Claude 3.5 Sonnet as the model

Random choice of 4 video templates and 2 image templates

A textual description and 2 few-shot examples for each template

3 final meme candidates with a CoT-like selection
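The TL;DR does not specify the output format of the final step. One way to make "three candidates plus a CoT-like selection" machine-checkable is to ask for JSON in which the model lists its candidates, writes a short comparison, and only then names the winner. The format and key names below are hypothetical, a sketch rather than the prompt actually used:

```python
import json
from dataclasses import dataclass


@dataclass
class Candidate:
    template_id: str
    captions: list[str]


# Hypothetical output format requested from the model. The "analysis" key comes
# before "best", so the model writes its comparison before committing to a choice.
FORMAT_HINT = (
    'Answer in JSON: {"candidates": [{"template_id": ..., "captions": [...]}, ...], '
    '"analysis": "<compare the candidates>", "best": <index of the funniest>}'
)


def parse_selection(raw: str, n_candidates: int = 3) -> Candidate:
    """Parse the model's JSON answer and return the candidate it picked."""
    data = json.loads(raw)
    candidates = [
        Candidate(str(c["template_id"]), [str(x) for x in c["captions"]])
        for c in data["candidates"]
    ]
    if len(candidates) != n_candidates:
        raise ValueError(f"expected {n_candidates} candidates, got {len(candidates)}")
    best = int(data["best"])
    if not 0 <= best < len(candidates):
        raise ValueError(f"best index {best} is out of range")
    return candidates[best]
```

Because the model generates keys in order, placing the reasoning before the index is what makes the selection "CoT-like" within a single call, which matters under a 15-second budget.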

Templates

The whole system revolves around meme templates. There are two types of templates: images and videos. For every meme template, I had to:

Find the template. My sources were Memegen itself, databases such as Know Your Meme, and Telegram channels dedicated to templates.

Configure the template, either for Memegen or for the video pipeline. This usually means marking up the caption positions.

Describe the template for the language model. Some models accept images directly, but with 10+ templates in a single prompt, images take many more tokens than textual descriptions.
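To make the "textual description" step concrete, here is a minimal sketch of a template record rendered into a compact prompt block. The field names, the layout of the block, and the Chill Guy description are illustrative assumptions; only the two example captions come from the Memegen config shown later in this post:

```python
from dataclasses import dataclass, field


@dataclass
class Template:
    template_id: str
    kind: str  # "image" or "video"
    description: str  # what the picture shows and how the meme is usually used
    n_captions: int
    examples: list[list[str]] = field(default_factory=list)  # few-shot captions


def render_template(t: Template, index: int) -> str:
    """Turn one template into a short text block for the prompt."""
    lines = [
        f"Template {index}: {t.template_id} ({t.kind}, {t.n_captions} caption(s))",
        f"Description: {t.description}",
    ]
    for i, captions in enumerate(t.examples, start=1):
        lines.append(f"Example {i}: " + " | ".join(captions))
    return "\n".join(lines)


# Illustrative record; the description text is hypothetical.
chill_guy = Template(
    template_id="chill-guy",
    kind="image",
    description="A relaxed cartoon dog with its hands in its pockets; "
    "used for staying calm about something that should be stressful.",
    n_captions=2,
    examples=[[
        "Me when I get a low SAT score but I remember",
        "I'm a chill guy who low-key doesn't GAF",
    ]],
)
```

A few such blocks cost a few hundred tokens in total, far fewer than the same number of images.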

Memegen

The core of the system is the good old Memegen, an open-source service that places captions on top of existing image templates. It doesn’t support MP4 videos, but otherwise it is perfect for our needs. It even comes with a predefined template collection!
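Memegen renders a meme from the URL alone: the template ID and the caption lines go into the path. A minimal sketch of building such a URL, following the special-character rules from Memegen's documentation (worth double-checking against the version you deploy). The base URL and the `chill-guy` template ID are hypothetical:

```python
from urllib.parse import quote

# Memegen's path escapes. Order matters: literal "_" and "-" are doubled
# first, and only then are spaces turned into "_".
_ESCAPES = [
    ("_", "__"),
    ("-", "--"),
    (" ", "_"),
    ("\n", "~n"),
    ("?", "~q"),
    ("&", "~a"),
    ("%", "~p"),
    ("#", "~h"),
    ("/", "~s"),
    ("\\", "~b"),
    ("<", "~l"),
    (">", "~g"),
    ('"', "''"),
]


def encode_line(text: str) -> str:
    """Encode one caption line as a Memegen path segment."""
    if not text:
        return "_"  # a blank caption line
    for old, new in _ESCAPES:
        text = text.replace(old, new)
    # Percent-encode whatever is still not URL-safe (e.g. Cyrillic letters).
    return quote(text, safe="'~")


def build_meme_url(base_url: str, template_id: str, lines: list[str], ext: str = "jpg") -> str:
    path = "/".join(encode_line(line) for line in lines)
    return f"{base_url.rstrip('/')}/images/{template_id}/{path}.{ext}"
```

For example, `build_meme_url("http://localhost:5000", "chill-guy", ["top text", "bottom"])` gives `http://localhost:5000/images/chill-guy/top_text/bottom.jpg`.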

However, there is one problem: the public website forces a watermark, which the task description forbids. To get around this, I used a self-hosted version of the same service, which also let me add new meme templates. A template is configured in YAML, and the format is fairly simple: you define the positions and font of the captions. Like this:

```yaml
name: Chill Guy
text:
  - style: upper
    color: white
    font: thick
    anchor_x: 0.0
    anchor_y: 0.0
    angle: 0.0
    scale_x: 1.0
    scale_y: 0.2
    align: center
    start: 0.0
    stop: 1.0
  - style: upper
    color: white
    font: thick
    anchor_x: 0.0
    anchor_y: 0.8
    angle: 0.0
    scale_x: 1.0
    scale_y: 0.2
    align: center
    start: 0.0
    stop: 1.0
example:
  - Me when I get a low SAT score but I remember
  - I'm a chill guy who low-key doesn't GAF
overlay:
  - center_x: 0.5
    center_y: 0.5
    angle: 0.0
    scale: 0.25
```

Video templates

For video templates, I used MoviePy and FFmpeg to add a single caption on a black background at the top of the video. This approach lacks flexibility, but it at least makes it possible to use some videos as templates.
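The same idea can be sketched with a plain FFmpeg call instead of MoviePy: pad the frame with a black band on top, then draw a wrapped caption into it. This assumes an `ffmpeg` binary built with libx264 and fontconfig (otherwise `drawtext` needs an explicit `fontfile=`), a Unix-style temp path without colons, and even frame dimensions; the band height, font size, and wrap width are arbitrary choices:

```python
import subprocess
import tempfile
import textwrap
from pathlib import Path


def build_ffmpeg_command(src, dst, caption_file, band_height=120, font_size=36):
    """Build an ffmpeg command that adds a black caption band above the video."""
    vf = (
        # pad=width:height:x:y:color; shift the original frame down by the band.
        f"pad=iw:ih+{band_height}:0:{band_height}:black,"
        # Center the caption horizontally and vertically inside the band.
        f"drawtext=textfile={caption_file}:fontcolor=white:fontsize={font_size}"
        f":x=(w-text_w)/2:y=({band_height}-text_h)/2"
    )
    return [
        "ffmpeg", "-y", "-i", str(src), "-vf", vf,
        "-c:v", "libx264", "-pix_fmt", "yuv420p", "-c:a", "copy", str(dst),
    ]


def add_caption(src, dst, caption, wrap_width=30, **kwargs):
    """Wrap the caption, write it to a temp file, and run ffmpeg."""
    wrapped = textwrap.fill(caption, width=wrap_width)
    with tempfile.NamedTemporaryFile(
        "w", suffix=".txt", delete=False, encoding="utf-8"
    ) as f:
        f.write(wrapped)
        caption_path = f.name
    try:
        cmd = build_ffmpeg_command(src, dst, caption_path, **kwargs)
        subprocess.run(cmd, check=True, capture_output=True)
    finally:
        Path(caption_path).unlink(missing_ok=True)
```

Passing the caption via `textfile=` rather than `text=` avoids escaping quotes and colons from LLM-generated text inside the filter string.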

Pipeline options

The main constraint is the 15-second limit, which rules out some interesting multi-step pipelines and test-time compute experiments.

The simplest option is a single LLM call with the whole template library and the user query in the context. This has two problems. First, the library may not fit into the context window. Second, it makes generation much slower: the more tokens we give the model, the longer it takes to produce a completion.

I also tried a two-step pipeline. The first LLM call receives the whole library but is asked to output only the index of a single template. Because the output is tiny, this call is fast. The second call takes the selected template and generates tailored captions. The main problem with this pipeline is that language models tend to pick the most popular templates rather than ones that fit the query.
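A minimal sketch of the two-step pipeline, with the model abstracted as a hypothetical `llm` callable (prompt in, completion out) so it runs without any provider SDK. The prompt wording and the fallback to template 0 on malformed output are assumptions:

```python
import re
from typing import Callable, Sequence

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


def select_template(llm: LLM, query: str, descriptions: Sequence[str]) -> int:
    """Step 1: show the whole library, ask only for an index (short output, fast)."""
    catalog = "\n".join(f"{i}. {d}" for i, d in enumerate(descriptions))
    prompt = (
        f"Templates:\n{catalog}\n\nQuery: {query}\n"
        "Reply with the number of the best template and nothing else."
    )
    match = re.search(r"\d+", llm(prompt))
    if match is None or int(match.group()) >= len(descriptions):
        return 0  # arbitrary fallback when the model's answer is unusable
    return int(match.group())


def write_captions(llm: LLM, query: str, description: str) -> list[str]:
    """Step 2: generate captions for the single selected template."""
    prompt = (
        f"Template: {description}\nQuery: {query}\n"
        "Write the meme captions, one per line."
    )
    return [line.strip() for line in llm(prompt).splitlines() if line.strip()]


def two_step(llm: LLM, query: str, descriptions: Sequence[str]) -> tuple[int, list[str]]:
    idx = select_template(llm, query, descriptions)
    return idx, write_captions(llm, query, descriptions[idx])
```

Only the first call pays for the long library prompt, and its output is a few tokens; the second call has a short prompt. That split is where the speedup comes from.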

The first step can also be replaced with semantic retrieval or simply a random selection of templates. In fact, random selection is not that bad: it forces the language model to come up with jokes for templates that don't obviously fit, which makes the memes even funnier.
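Random selection is the cheapest first step of all, since no model call is needed. A sketch matching the "4 video templates and 2 image templates" from the TL;DR; the shuffle is my own addition as a guard against position bias in the prompt, not something the post measured:

```python
import random
from typing import Optional, Sequence


def sample_templates(
    video_pool: Sequence[str],
    image_pool: Sequence[str],
    n_video: int = 4,
    n_image: int = 2,
    rng: Optional[random.Random] = None,
) -> list[str]:
    """Pick templates uniformly at random, ignoring the query entirely."""
    rng = rng or random.Random()
    chosen = rng.sample(list(video_pool), k=min(n_video, len(video_pool)))
    chosen += rng.sample(list(image_pool), k=min(n_image, len(image_pool)))
    # Shuffle so videos do not always come first in the prompt.
    rng.shuffle(chosen)
    return chosen
```

Passing a seeded `random.Random` makes a run reproducible, which is handy when comparing prompts.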

Backend

FastAPI and SQLite, nothing fancy. The only issue is the growing number of saved images and videos, which I have to clean up manually from time to time. A proper TTL would probably solve this.
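A TTL cleanup could be as small as the sketch below, assuming a hypothetical `media` table that records each generated file's path and creation time (the post does not describe its actual schema). Running `cleanup_expired` periodically, for example from a background task, would replace the manual cleanup:

```python
import sqlite3
import time
from pathlib import Path
from typing import Optional

# Hypothetical schema: one row per generated image or video.
SCHEMA = "CREATE TABLE IF NOT EXISTS media (path TEXT PRIMARY KEY, created_at REAL NOT NULL)"


def init_db(conn: sqlite3.Connection) -> None:
    conn.execute(SCHEMA)


def register(conn: sqlite3.Connection, path, now: Optional[float] = None) -> None:
    """Record a freshly generated file."""
    created = time.time() if now is None else now
    conn.execute(
        "INSERT OR REPLACE INTO media (path, created_at) VALUES (?, ?)",
        (str(path), created),
    )
    conn.commit()


def cleanup_expired(conn: sqlite3.Connection, ttl_seconds: float, now: Optional[float] = None) -> int:
    """Delete files older than the TTL and their rows; return how many were removed."""
    cutoff = (time.time() if now is None else now) - ttl_seconds
    rows = conn.execute("SELECT path FROM media WHERE created_at < ?", (cutoff,)).fetchall()
    for (path,) in rows:
        Path(path).unlink(missing_ok=True)  # tolerate files already removed by hand
    conn.execute("DELETE FROM media WHERE created_at < ?", (cutoff,))
    conn.commit()
    return len(rows)
```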

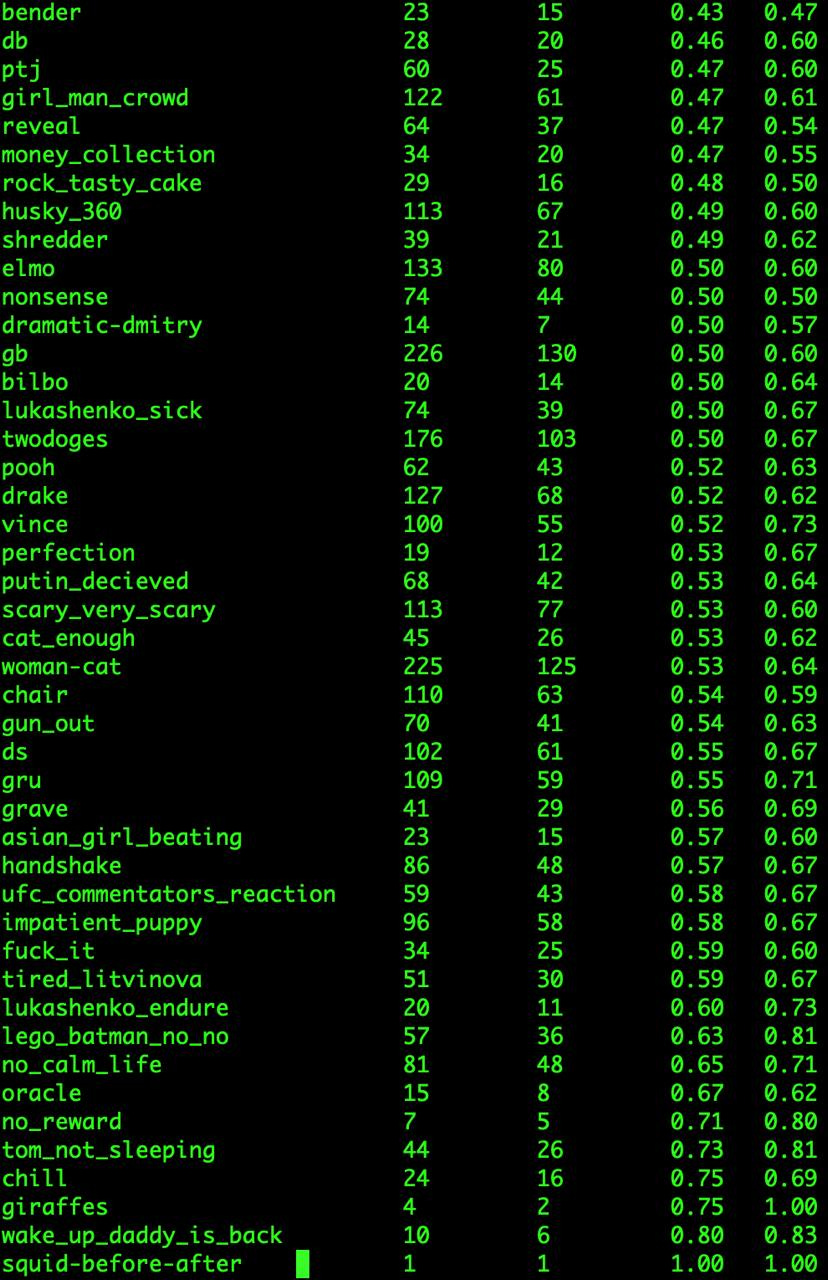

Online statistics

Some templates are inherently funnier than others. Since we have real user statistics, we can use them to prune the bad templates and keep only the proven good ones.
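One way to do that pruning, sketched under loud assumptions: each template has a count of favorable votes out of total votes (a hypothetical shape; the Arena's real statistics may differ), the rate is smoothed toward a prior so that a template with 2 votes out of 2 does not look perfect, and templates with too few votes are kept so they still get shown. All thresholds are arbitrary:

```python
from dataclasses import dataclass


@dataclass
class TemplateStats:
    template_id: str
    votes_for: int  # hypothetical: times the meme was preferred or liked
    votes_total: int  # hypothetical: times it received any vote


def smoothed_rate(s: TemplateStats, prior_rate: float = 0.5, prior_weight: float = 10) -> float:
    """Win rate shrunk toward prior_rate, as if prior_weight votes were already cast."""
    return (s.votes_for + prior_rate * prior_weight) / (s.votes_total + prior_weight)


def keep_good_templates(stats: list[TemplateStats], min_votes: int = 20, threshold: float = 0.5) -> list[str]:
    kept = []
    for s in stats:
        if s.votes_total < min_votes:
            kept.append(s.template_id)  # not enough data yet: keep exploring it
        elif smoothed_rate(s) >= threshold:
            kept.append(s.template_id)
    return kept
```

For instance, 30 favorable votes out of 40 gives a smoothed rate of (30 + 5) / (40 + 10) = 0.7, so that template survives, while 5 out of 40 gives 0.2 and it is dropped.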

Conclusion

Overall, it was a fun experience, but I’m looking forward to the end-to-end era, when native image generation will take AI-generated memes to the next level.

Meanwhile, AI Meme Arena is already up and running, with over 8,000 memes so far. Jump in and try it yourself, or, if you want to add your own Memegent, feel free to use my code as a starting point for implementing this spec.

Repo with all the code: https://github.com/IlyaGusev/memetron3000

Join AI Meme Arena: https://discord.gg/aimemearena